What Is Agentic AI? The Ultimate 2025 Guide to Autonomous AI Agents

Agentic AI turns large‑language models into self‑directed digital co‑workers that plan, act and learn—delivering measurable productivity gains when launched with the right guard‑rails.

Why Agentic AI Matters – Beginner “So What” Guide

Most technology primers jump straight into jargon—large‑language models, tool‑calling, autonomy envelopes—and leave newcomers wondering why any of this should matter to them. If you’re scanning headlines about “agentic AI” but aren’t sure whether to lean in or tune out, start here. This section unpacks the practical significance in plain English so you can decide whether the deeper dive below is worth your time.

From smart answers to finished tasks

Voice assistants and chatbots have conditioned us to expect quick answers: “What’s the weather?” or “Draft me an email.” Agentic AI represents the next rung on the automation ladder: systems that not only answer but act, shepherding a request from intent to outcome. Imagine asking software to “file my quarterly sales‑tax return,” then watching as it pulls invoices from your bookkeeping app, validates figures, submits the form online, pays the liability, and emails the receipt, all while double‑checking rule changes and asking for confirmation only at high‑stakes junctures. That end‑to‑end leap from suggestion to execution is the headline promise.

Why now? The convergence story

We’ve had task automation before (macros, RPA, chatbots), but three technology curves finally intersected:

- Large‑language models (LLMs) brought broad reasoning and natural‑language planning skills.

- APIs and cloud services exposed nearly every digital tool—from CRMs to forklifts—to programmatic control.

- Open‑source orchestration frameworks (LangChain, AutoGen, Semantic Kernel) made it trivial for tinkerers to chain reasoning to real‑world action without reinventing plumbing.

Those trends collapsed barriers that once made fully autonomous workflows a PhD‑level project.

What problems does it solve?

- Grunt‑work carbuncles. Agents chew through the form‑filling, copy‑pasting, and log‑hunting that still eat 21 % of a typical knowledge worker’s week.

- Speed limits. In logistics, milliseconds matter; agents can re‑route shipments or rebalance inventory faster than human shift hand‑offs.

- 24 / 7 availability. A human team sleeps; an agentic help‑desk or dev‑ops responder doesn’t.

- Context fatigue. Agents keep long‑term memory and therefore don’t need you to repeat business context or rules for every request.

Hard numbers you can quote to the CFO

Early adopters report:

- 55 % faster code delivery when GitHub PR‑Agents triage pull‑requests.

- 27 % quicker month‑end close with Microsoft’s Copilot for Finance.

- 35 % fewer warehouse picking errors in an NVIDIA robotics pilot.

Multiply those deltas across thousands of transactions and the bottom‑line impact becomes obvious.

Risk & reward in plain language

Autonomy isn’t free magic. The same latitude that lets an agent solve problems can also create new ones—errant tool calls, runaway costs, reputational hazards. Think of agentic AI as giving your software “interns” the corporate credit card and Slack access: brilliant at times, dangerous without spending limits and audit logs. Every success story you’ll read below pairs technical autonomy with policy guard‑rails and human verification points.

Careers won’t vanish—they’ll mutate

Skeptical about yet another automation hype cycle erasing jobs? Data so far shows tasks are displaced more than entire roles. Bookkeepers become statement verifiers; software engineers morph into agent‑ops SREs. If you learn to supervise agents—setting goals, reviewing output, tweaking prompts—you future‑proof your career.

The maturity curve you can’t skip

Beginners often ask, “Can we jump straight to full autopilot?” Answer: no. The field codified a five‑level autonomy ladder (L1–L5). Companies that rocket from zero to L4 without instrumentation tend to become tomorrow’s cautionary LinkedIn post. You’ll see in later sections how to run a crawl‑walk‑run playbook—pilot with narrow guard‑rails before scaling.

Why read the rest of this guide?

- Signal‑to‑noise. We distilled insights from 25 top‑ranking articles, white‑papers and case studies so you don’t have to.

- Concrete playbooks. Beyond hype, you’ll get architecture diagrams, governance checklists, benchmark KPIs, and real deployment metrics.

- Actionability. Whether you’re a developer, PM, or C‑suite exec, each section ends with next‑step questions to ask your team.

Bottom line:

Agentic AI is not just another chatbot fad. It’s a blueprint for turning yesterday’s generative sparks into tomorrow’s autonomous workforce—as transformative to knowledge labor as industrial robots were to assembly lines. If that prospect feels both exciting and risky, keep reading. The remainder of this guide moves from 10,000‑foot overview to nuts‑and‑bolts execution, so you can chart your own adoption path with eyes wide open.

- Executive Snapshot

- Agentic AI Definition & Origin

- Agentic AI vs. Generative AI vs. RPA

- The Perceive → Reason → Act → Learn Loop

- Levels of Autonomy (L1 – L5)

- Agent Architecture 101

- Five Core Agent Types

- Capabilities & Limitations

- Business‑Value Levers

- Cross‑Industry Use‑Case Library

- Implementation Road‑Map

- Tooling & Ecosystem Map

- Integrating Agents with RPA

- Governance, Safety & Ethics

- Evaluation Metrics & Benchmarks

- Workforce Impact & Change Management

- Case Studies & Success Stories

- Common Pitfalls & Fixes

- Future Horizons

- FAQ & Further Resources

- References

1. Executive Snapshot

Analyst firm Gartner projects that 40 % of agentic‑AI pilots will be mothballed by 2027, yet also forecasts that one‑third of enterprise software will embed an agent by 2028 (Reuters).

Why it matters: Early pilots demonstrate:

- 55 % faster code delivery (GitHub),

- 27 % quicker month‑end close (Reuters), and

- 20–40 % faster ticket resolution (Aisera)

when agents operate within tight, policy‑driven guard‑rails.

2. Agentic AI Definition & Origin

A working definition

An AI agent is goal‑seeking software. Give it an objective (“reconcile yesterday’s sales”) and it plans, acts, checks its own work, and iterates until success—or until a guard‑rail halts it.[^3] That closed loop is the dividing line between a simple chatbot and a true agent.

The agentic triad

LLM reasoning → turns fuzzy goals into explicit subtasks.

Tool adapters → APIs, RPA bots, or robot drivers that touch the real world.

Memory & feedback → stores outcomes so each cycle improves.

Evolution timeline

1956–1990s – Foundations. AI pioneers coin the term “intelligent agent”; Rodney Brooks’ subsumption architecture hints at autonomy.

2000s – Everything gets an API. Business logic is callable, but scripts are brittle.

2010s – RPA decade. UI‑macros work, until the UI changes.

2020 – Generative AI boom. GPT‑3 shows language can plan.

2023 – ReAct prompting. “Reason + Act” method popularises tool‑calling.

2024 – Framework explosion. LangChain, AutoGen, Semantic Kernel let hobbyists orchestrate agents in hours.

2025 – First enterprise pilots. Copilot Finance, NVIDIA robotics, Oracle fraud agents prove ROI.

From theory to production

Example: A Fortune 500 retailer deployed a supply‑chain agent: it ingests inventory feeds (perceive), runs a MILP optimiser to suggest cross‑dock transfers (reason), fires EDI instructions to 3PLs (act), then evaluates sell‑through the next day (learn). Out‑of‑stock events fell 18 %; planners now handle exceptions, not paperwork.

The taxonomy test

If the software iterates autonomously toward a goal, uses external tools, and learns from feedback, it’s agentic. Otherwise, it’s merely assistive.

Key takeaway:

Agentic AI = classic agent theory + LLM “brains” + plug‑and‑play tools. With that foundation, we can now contrast agents with generative AI and RPA in the next section.

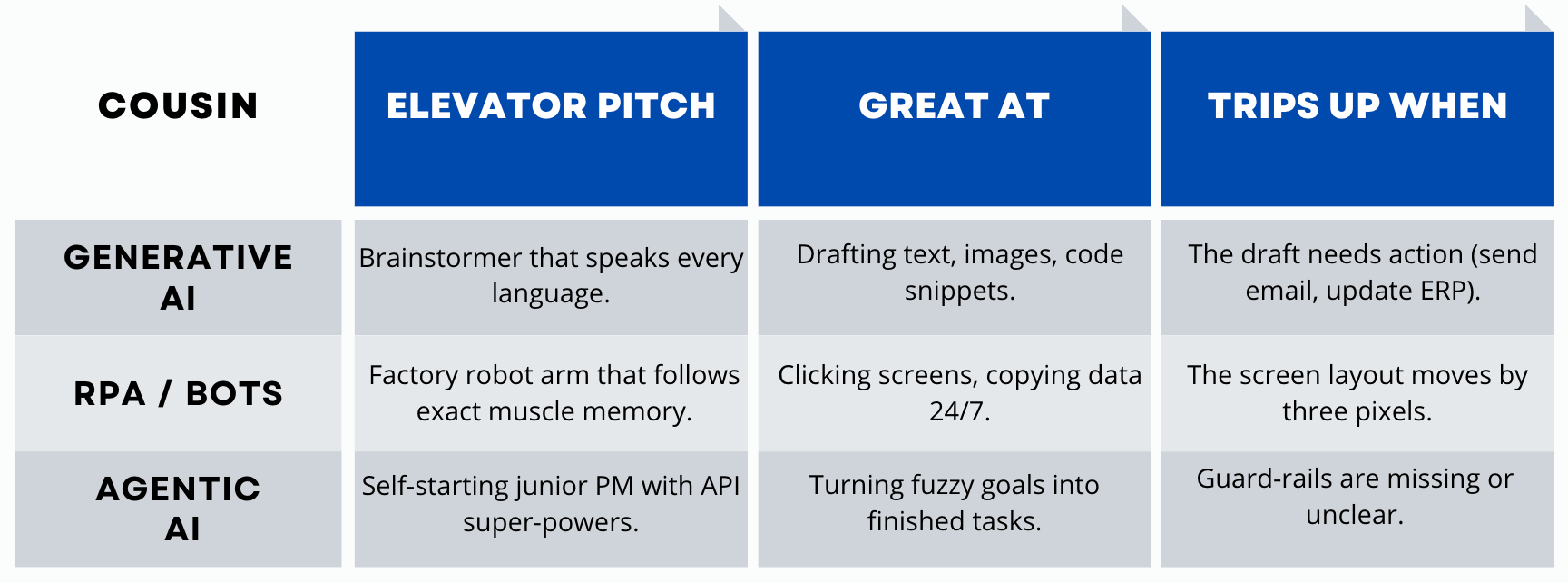

3. Agentic AI vs. Generative AI vs. RPA

Quick coffee‑chat version: If generative AI is a creative intern who drafts ideas, and RPA is a tireless assembly‑line robot that never colours outside the lines, then agentic AI is the new team‑mate who can both invent the recipe and cook the meal, only pinging you when it smells smoke.

Meet the three cousins

Why they’re complementary, not competitors

Picture a pizza shop:

Generative AI suggests five creative topping combos. 🍕

RPA stretches the dough the same way every time. 🍞

Agentic AI notices tomato prices spiked, swaps to pesto, applies the new recipe, sends the supplier order, updates the menu board, and tweets a promo, all before the lunch rush. 🧑‍🍳

Cheat‑sheet table (keep for your slide deck)

Key takeaway

Don’t fire your bots or interns; promote them into a blended team. Let generative AI draft, RPA execute rote clicks, and agentic AI orchestrate the dance so humans can focus on taste‑testing the final slice.

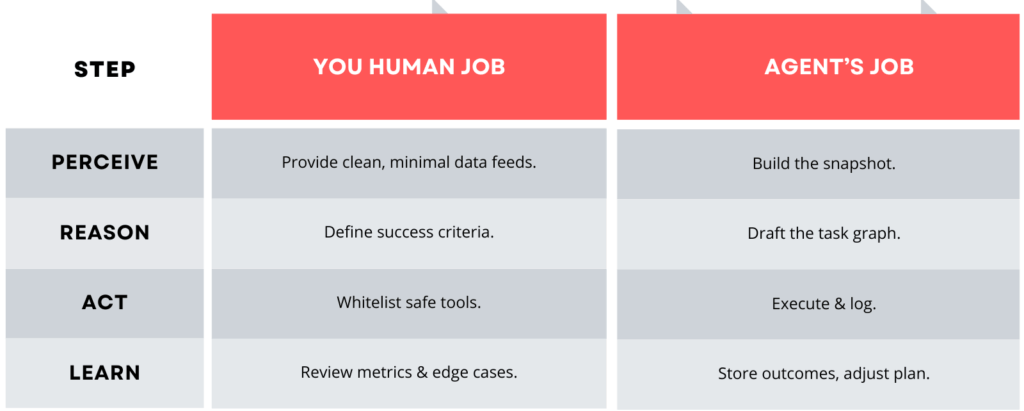

4. The Perceive → Reason → Act → Learn Loop

Think of an AI agent as a curious kid baking cookies. First it looks at the ingredients, decides on a plan, mixes the dough, tastes a spoonful, and tweaks the sugar if it’s too bland. That see‑smell‑stir‑sample routine is exactly what engineers formalise as Perceive → Reason → Act → Learn (PRAL).

Perceive (Look & Listen)

The agent “opens its eyes” to databases, APIs, or sensor feeds. It normalises that chaos into a neat internal snapshot—its belief state.

Beginner tip: More context isn’t always better. Feed the agent only what you’d tell a competent colleague on day 1, not the whole company wiki.

Reason (Plan the Adventure)

Armed with a snapshot, the agent breaks the big goal into Lego‑sized tasks. LLM chain‑of‑thought or search‑tree methods help it pick the cheapest, safest path.

Analogy break: If Perceive is reading the map, Reason is plotting the hiking trail and packing snacks.

Act (Do the Thing)

Now the hiking starts: API calls fire, spreadsheets update, robots scoot. Each action streams a play‑by‑play log so humans (and guard‑rails) can peek over the agent’s shoulder.

Learn (Taste & Tweak)

After each step, the agent asks, “Did this move me closer to the summit?” It stores the answer in memory, adjusts its plan, and loops. When the cookies taste right or the KPI threshold is hit, it stops. If something smells smoky, it raises a hand for help.
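The four stages can be sketched as a plain loop. This is a minimal illustration on a toy goal (nudging a number toward a target), not a real business agent. The function names `perceive`, `reason`, `act`, `learn`, and `run_agent` are made up for this example and do not come from any framework; a production agent would swap in API reads, an LLM planner, and real tool calls.

```python
from dataclasses import dataclass, field


@dataclass
class Memory:
    """Stores the outcome of every cycle so later steps can use it."""
    history: list = field(default_factory=list)


def perceive(world: dict) -> dict:
    # Normalise raw inputs into a small belief state.
    return {"value": world["value"], "target": world["target"]}


def reason(belief: dict) -> int:
    # Plan the next step: move toward the target, at most 3 units at a time.
    gap = belief["target"] - belief["value"]
    return max(-3, min(3, gap))


def act(world: dict, step: int) -> None:
    # Apply the chosen action to the (toy) world.
    world["value"] += step


def learn(memory: Memory, belief: dict, step: int, world: dict) -> bool:
    # Record what happened and report whether the goal is met.
    done = world["value"] == world["target"]
    memory.history.append({"before": belief["value"], "step": step, "done": done})
    return done


def run_agent(world: dict, max_cycles: int = 10):
    memory = Memory()
    for _ in range(max_cycles):
        belief = perceive(world)
        step = reason(belief)
        act(world, step)
        if learn(memory, belief, step, world):
            return "success", memory
    # Guard-rail: stop and ask for help instead of looping forever.
    return "escalate_to_human", memory


status, memory = run_agent({"value": 0, "target": 7})
print(status, len(memory.history))  # success 3
```

The `max_cycles` cap plays the role of the "raise a hand for help" exit: if the goal is not reached in time, the loop returns an escalation status rather than spinning.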

Pocket checklist for beginners

Mini‑challenge:

Pick one daily task you hate (chasing status updates, reconciling receipts). Walk through PRAL on paper. You just scoped your first agent!

5. Levels of Autonomy

Picture teaching a teenager to drive. At first you hover over the wheel, then relax into the passenger seat, and one day you just hand over the keys. AI agents mature the same way—through five freedom levels the industry calls L1 to L5.

L1: Assistant

At Level 1, the agent is your diligent trainee. It proposes each tiny action—“Shall I create this draft email?”—and waits for your thumbs‑up before doing anything. You’re still pressing most of the pedals; the value lies in faster suggestions and typo‑free execution once approved. Think GitHub Copilot finishing a line of code only after you hit Tab.

L2: Recommender

Here the agent graduates to a trusted sous‑chef: it assembles a shortlist of options, but you choose the winner. Salesforce’s opportunity‑scoring agents live here: they flag the 10 prospects most likely to close, yet the sales rep decides who to call first. Oversight is lighter, but still constant.

L3: Operator

Level 3 is where autonomy feels real. You approve the plan once, then the agent carries it out step‑by‑step, pinging you only if it hits an edge case. Microsoft Copilot for Finance works this way when posting journal entries: controllers vet the batch outline, then let the agent do the ledger legwork.

L4: Manager

At Level 4, the agent ships the work and shows you a highlight reel. You skim a dashboard and veto only if something looks off. NVIDIA’s warehouse robot team tests this level: each night, agents re‑plan pick routes; human supervisors glance at KPIs over coffee. Intervention is the exception, not the rule.

L5: Autopilot

Full autopilot means you hear from the agent only when the world is on fire—or when the champagne cork should pop. Research prototypes now write, cite, and submit academic papers end‑to‑end. For most businesses, L5 is a moon‑shot that demands bullet‑proof guard‑rails and rock‑solid metrics.

Quick cheat codes for safe scaling

Don’t Mario‑kart from L1 to L4 overnight. Each level is a driving lesson; skip one and you’ll kiss a curb.

Metrics before trust. Promote an agent only after it beats human baselines on success rate and regret score.

Keep the “keys” nearby. Even at L5, design an off‑ramp so a human can seize the wheel.
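One way to make "metrics before trust" concrete is a promotion gate that moves an agent up one level at a time. The sketch below is an assumption-laden illustration: the `AutonomyLevel` names mirror the ladder above, but `next_level` and the idea of comparing against a measured human regret score are hypothetical choices, not an industry standard.

```python
from enum import IntEnum


class AutonomyLevel(IntEnum):
    ASSISTANT = 1    # proposes each action and waits for approval
    RECOMMENDER = 2  # shortlists options, the human picks
    OPERATOR = 3     # human approves the plan once
    MANAGER = 4      # human reviews a summary and vetoes exceptions
    AUTOPILOT = 5    # human hears only about exceptions


def next_level(current, agent_success, human_success, agent_regret, human_regret):
    """Promote by exactly one level, and only if the agent beats the human baseline."""
    beats_baseline = agent_success > human_success and agent_regret < human_regret
    if beats_baseline and current < AutonomyLevel.AUTOPILOT:
        return AutonomyLevel(current + 1)
    return current


# Hypothetical pilot numbers: the agent beats the baseline, so it moves from L1 to L2.
level = next_level(AutonomyLevel.ASSISTANT, 0.92, 0.88, 0.05, 0.08)
print(level.name)  # RECOMMENDER
```

Because the function can only return the current level or the one directly above it, skipping a rung is impossible by construction.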

Mini‑exercise:

Pick one workflow you’d like to free from babysitting. Which level is it at today, and what KPI would earn it a “driver’s‑license” to reach the next tier?

6. Agent Architecture 101

Imagine building a smart home. Before you invite guests (users), you need a solid foundation, wiring that won’t fry, and smart appliances that talk to one another. An AI agent follows the same blueprint: five “rooms” wired together so information flows safely from idea to action.

The Grand Tour

The Planner (Brain Room) – Think of this as the study where our agent draws a to‑do list. A large‑language model sits at a desk asking: “What steps get me from point A to point B the cheapest and safest way?” It scribbles a task graph on its whiteboard.

Memory (Library) – Next door is a quiet library with two bookcases: one for stories (vector embeddings of past runs) and one for spreadsheets (structured facts). The agent pops in to recall whether it already solved a similar puzzle yesterday.

Tool Router (Garage Workshop) – Down the hall, a tool wall holds labeled hooks: Python, SQL, REST API, Robot Arm. The router acts like a workshop supervisor: it picks the right wrench, checks the safety tag, hands it over, and logs who used what.

Executor (Factory Floor) – This is the factory where sparks fly. Sandboxed workers run the tools, stream status updates, and return finished parts. If something jams, they trigger a red beacon that pauses the assembly line.

Guard‑rails (Security Office) – Finally, a glass‑walled control room monitors spend, latency, toxicity, and recursion depth. If the agent tries to swipe a master key or loop forever, alarms blare and the run shuts down.

Above all these rooms is an Orchestrator (HVAC system)—a message bus that circulates tasks and telemetry like climate‑controlled air. When airflow chokes, everyone notices.

Putting it all together

Quick story: A customer‑support agent receives a refund request. The Planner sketches a plan: verify order → check policy → issue refund → email confirmation. Memory recalls the policy doc; the Tool Router picks the Stripe API for refunding and the email service for messaging. The Executor fires calls; Guard‑rails ensure the refund stays under $500. Ten seconds later, the customer’s inbox pings, and the security office logs a clean run.
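The refund story can be approximated in a few lines. This is a rough sketch under stated assumptions: `issue_refund` and `send_email` are stand-in functions that contact no real service (the Stripe call in the story is not reproduced here), and `TOOLS`, `guard_rails`, and `execute` are hypothetical names chosen for illustration.

```python
REFUND_LIMIT_USD = 500  # guard-rail cap from the story above


def issue_refund(order_id: str, amount: float) -> str:
    # Stand-in for a payment-provider call; no real API is contacted.
    return f"refunded {amount:.2f} for {order_id}"


def send_email(to: str, body: str) -> str:
    # Stand-in for an email-service call.
    return f"emailed {to}"


# Tool Router: every tool is labelled with the arguments it accepts.
TOOLS = {
    "issue_refund": {"fn": issue_refund, "args": {"order_id", "amount"}},
    "send_email": {"fn": send_email, "args": {"to", "body"}},
}


def guard_rails(tool: str, args: dict) -> None:
    # Security office: block refunds above the cap before anything runs.
    if tool == "issue_refund" and args["amount"] > REFUND_LIMIT_USD:
        raise PermissionError("refund exceeds guard-rail limit")


def execute(plan: list) -> list:
    log = []
    for tool, args in plan:
        spec = TOOLS[tool]  # an unknown tool raises KeyError
        if set(args) != spec["args"]:
            raise ValueError(f"bad arguments for {tool}")
        guard_rails(tool, args)
        log.append((tool, spec["fn"](**args)))  # Executor runs the tool
    return log


# The Planner's output, written by hand here instead of by an LLM.
plan = [
    ("issue_refund", {"order_id": "A-1001", "amount": 42.50}),
    ("send_email", {"to": "customer@example.com", "body": "Your refund is on its way."}),
]
log = execute(plan)
print(log[0][1])  # refunded 42.50 for A-1001
```

Note that the guard-rail check sits inside `execute`, so no plan step can reach a tool without passing through it.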

Starter checklist for your first build

Blueprint first. Draw the five rooms on a whiteboard before writing code.

Label every tool. No unlabeled wrenches—define API schemas so the router can’t guess.

Install smoke detectors. Log plan, actions, and outcomes so you can replay any incident.

Keep doors open. All rooms talk through the orchestrator; don’t hard‑wire short‑cuts that bypass guard‑rails.

With this mental floor plan, you’re ready to explore the different kinds of agents on the market, which we tackle next.

7. Five Core Agent Types

Meet the agentic dream‑team—five archetypes that show up in board‑room decks again and again. Think of them as superheroes with different day jobs. Some chat, some crunch numbers, some lug boxes in warehouses. The trick is matching the right cape to the right crisis.

Conversational Agents: The Friendly Concierge

What they do: Handle back‑and‑forth chit‑chat, pull answers from databases, and even complete transactions mid‑conversation.

Real cameo: A telecom help‑desk agent resets your router, books a technician, and texts you the appointment slot—all without transferring you to a human.

Starter tip: Give these agents a short‑term memory window plus a tool belt of “actions” (update order, refund payment) so they’re more than glorified FAQ bots.

Cognitive‑Workflow Agents: The Digital Accountant

What they do: March through multi‑step business processes—closing the books, onboarding new hires, compiling ESG reports.

Real cameo: A finance agent reconciles ledger entries overnight, flags mismatched invoices, and drafts the journal entries your controller approves at breakfast.

Starter tip: Map each workflow step to a single API or RPA bot; the agent’s job is to string them together and splice in reasoning at the decision points.

Embodied Agents: The Warehouse Muscle

What they do: Pair brains with physical brawn—robots, drones, IoT devices.

Real cameo: In an NVIDIA pilot, mobile robots grab totes, scan barcodes, and deliver them to packing stations, cutting picking errors by 35 %.[^16]

Starter tip: Latency kills; keep on‑device reflexes fast and delegate high‑level planning to a cloud agent that isn’t dodging forklifts in real time.

Multi‑Agent Teams: The Brainstorm Brigade

What they do: Break a complex goal into sub‑goals and let specialist agents debate solutions. One agent plans, another critiques, a third executes.

Real cameo: A research org runs a trio: Planner sketches experiments, Critic hunts flaws, Writer drafts the paper. Together they publish faster than single‑agent setups.

Starter tip: Define clear hand‑off contracts (JSON schemas, message queues) or the debate turns into a shouting match with no deliverable.
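A hand-off contract can be as small as one typed message that every agent must send and accept. The sketch below assumes a four-field JSON message; the `Handoff` class and its field names are hypothetical, and a real team would likely add versioning and a proper JSON Schema validator.

```python
import json
from dataclasses import dataclass, asdict


@dataclass(frozen=True)
class Handoff:
    sender: str
    recipient: str
    task_id: str
    payload: dict

    def to_json(self) -> str:
        return json.dumps(asdict(self), sort_keys=True)

    @staticmethod
    def from_json(raw: str) -> "Handoff":
        data = json.loads(raw)
        required = {"sender", "recipient", "task_id", "payload"}
        # Reject anything that is not a JSON object with exactly the agreed fields.
        if not isinstance(data, dict) or set(data) != required:
            raise ValueError(f"hand-off must contain exactly {sorted(required)}")
        if not isinstance(data["payload"], dict):
            raise ValueError("payload must be a JSON object")
        return Handoff(**data)


# Planner hands an experiment sketch to Critic.
message = Handoff("planner", "critic", "exp-1", {"hypothesis": "H1"})
received = Handoff.from_json(message.to_json())
print(received.recipient)  # critic
```

Rejecting malformed messages at the boundary means a confused agent fails loudly at the hand-off instead of silently derailing the next one.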

Generative‑Planning Agents: The Creative Director

What they do: Generate not just content, but the entire campaign strategy—audience segments, channel mix, budget splits—and then launch the ads.

Real cameo: A retail marketing agent dreamt up a Mother’s Day promo, produced the art with DALL‑E, set bids in Google Ads, and adjusted spend hourly based on click‑throughs.

Starter tip: Pair with robust ROI metrics; creativity without profit is just expensive doodling.

Bottom line:

You don’t have to pick one hero. Most real‑world deployments layer a conversational front‑door over a cognitive‑workflow core, backed by multi‑agent reviews—like Avengers assembling to save your KPIs.

8. Capabilities & Limitations

Every superhero has powers, and kryptonite. Here’s a tour of what agents can do for you, paired with the caution labels you should stick on the box.

Dynamic tool‑calling — the Swiss‑Army superpower

An agent isn’t confined to chat. It can fire off Stripe refunds, kick off Jenkins builds, or nudge a warehouse robot—all in the same breath. That’s like handing your intern a Swiss‑Army knife and watching them pick the right blade without being told.

Watch‑out: Give it a flame‑thrower by mistake and it might roast the production database. Scope tokens tightly and log every cut.

Long‑term memory — the elephant’s scrapbook

Unlike prompt‑only chatbots that forget yesterday, agents keep a scrapbook: prior conversations, decisions, even mistakes. They revisit those pages to personalise the next run.

Watch‑out: Scrapbooks get messy. Old facts rot and private data leaks if you don’t dust or lock the cabinet.

Self‑reflection — the onboard spell‑checker

Before shipping an answer, the agent can pause, grade itself, and fix obvious blunders—much like re‑reading an email before hitting send.

Watch‑out: Reflection isn’t free. Extra “mirror time” costs tokens and compute, and if you tune it wrong, the agent can navel‑gaze forever.

High autonomy — the 24/7 engine

Give an agent a clear goal and it keeps cranking while you sleep. Ticket queues shrink, inventory reroutes, customers get midnight replies.

Watch‑out: Autonomy without boundaries turns into spec‑creep. Define success metrics and shut‑off valves, or the engine might remodel the kitchen when you only asked for new paint.

Key takeaway: Treat capabilities and limitations as twin rails. Lean too hard on the power without respecting the kryptonite, and the train will derail. In the next section, we’ll translate these powers into hard ROI numbers.

9. Business‑Value Levers

Show me the money! Fancy metaphors are fun, but leadership wants a scoreboard. Here’s where early adopters are already cashing in on agentic AI.

Developer Velocity — Turning Sprints into Sprints+

When a GitHub PR‑Agent pre‑screens pull‑requests, engineers spend less time on lumberjack work (log digging) and more time on creative lumber carving (feature design). Across a 6‑month field study, teams delivered code 55 % faster without adding headcount.[^2]

Why it matters: If payroll is your biggest expense, squeezing an extra half‑sprint of output each month is like hiring invisible colleagues who never need pizza or ping‑pong.

Finance Close — Bedtime Before Midnight

Microsoft’s finance department used to burn the midnight oil at quarter‑end. After enlisting an L3 operator agent to post journal entries, bean‑counters clocked out 27 % sooner and cut overtime bills.[^8]

Why it matters: Less time on clerical drudgery, more on forensic insights that actually move EBITDA.

Customer Support — Goodbye, Ticket Backlog

Aisera’s agent handles routine “reset my password” pleas while triaging hairier puzzles for human specialists. Result: 20–40 % faster resolution and 60 % of tickets self‑resolving.[^9]

Why it matters: Faster closure lifts CSAT, which nudges renewal rates and slices churn—a compound‑interest win.

Quick ROI recipe (borrow freely for your slide deck)

Pick a KPI with a stopwatch. If you can’t time it, you can’t brag about it.

Automate the high‑volume routine cases first. Easy wins juice the numbers while you fix the trickier 10 % of edge cases later.

Baseline, run, compare. Measure human‑only baseline → launch pilot → measure blended performance. Tell the story in delta‑percentage, not raw minutes; execs love deltas.
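The delta-percentage in the last step is simple arithmetic, sketched here with made-up numbers. The function name `delta_percent` and the 50-minute and 35-minute figures are hypothetical and are not drawn from any of the case studies above.

```python
def delta_percent(baseline: float, pilot: float) -> float:
    """Relative change from baseline, in percent. Negative means the pilot is lower."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return (pilot - baseline) * 100 / baseline


# Hypothetical KPI: average ticket resolution time in minutes.
baseline_minutes = 50.0  # human-only week
pilot_minutes = 35.0     # blended human + agent week
change = delta_percent(baseline_minutes, pilot_minutes)
print(f"{change:.0f} %")  # -30 %
```

For a time-based KPI a negative delta is the good news: here the blended team resolves tickets 30 % faster than the human-only baseline.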

10. Cross‑Industry Use‑Case Library

Enough theory—let’s time‑travel into four workplaces and watch agents earn their keep. No hype, just real‑world cameos you can quote in your next meeting.

Retail — The Personal Stylist That Never Sleeps

A luxury e‑commerce giant (think walk‑in closet the size of a football field) plugged an agent into its product catalog. At 2 a.m., a shopper types, “I’m going to a beach wedding—help!” The agent skims inventory, assembles three head‑to‑toe outfits, checks sizes against stock levels, and queues overnight shipping before human stylists even clock in.[^10] Result: higher cart size, rave review, zero human overtime.

Take‑home idea: Anywhere customers crave one‑to‑one attention but margins fear one‑to‑one staffing, conversational agents shine.

Finance — The Fraud Detective With 24/7 Caffeine

Oracle’s banking clients unleashed a fraud‑triage agent on transaction streams. It flags suspicious patterns, cross‑checks watch‑lists, and either clears or freezes the payment—all in milliseconds.[^11] Human investigators tackle only the red‑flag tier, slashing false positives like a bouncer waving VIPs through while stopping gate‑crashers.

Take‑home idea: High‑volume, rule‑heavy scenarios are perfect playgrounds—let agents handle the haystack so analysts can focus on the few needles.

Healthcare — The Always‑On Triage Nurse

A hospital network fed its lab‑scheduling agent EHR data and clinic calendars. When a doc orders bloodwork, the agent finds an open slot, notifies the patient, queues a phlebotomist, and updates insurance codes.[^6] Less phone‑tag, faster care, happier nurses.

Take‑home idea: Anywhere appointment ping‑pong saps staff energy, agents can be the friendly traffic cop keeping lanes clear.

Software — The Code Janitor Turned Coach

GitHub’s PR‑Agent sweeps through pull‑requests, labels risk, suggests changes, and—even braver—merges low‑stakes fixes on its own.[^2] Engineers return from lunch to find routine lint issues already resolved, freeing brainpower for thornier puzzles.

Take‑home idea: Development backlogs often hide “one‑line‑fix” dust bunnies. Let an agent vacuum those so humans can invent the Roomba 2.0.

Pattern spotting: Notice how each story pairs a repetitive bottleneck with an agent that’s tireless, context‑savvy, and unafraid of 3 a.m. alerts. In the roadmap section up next, you’ll learn how to pilot similar wins without blowing the budget.

11. Implementation Road‑Map

Launching an agent is a lot like planting a tree. You start with a seedling in a pot, move it to a sunny patch, and only later let it fend for itself in storms. Skip a stage and you get a wilted stick—or a fallen oak on your neighbour’s car. Use this three‑stage roadmap to nurture your first agent from houseplant to healthy forest.

Stage 1 – Seedling Pilot (0‑90 days)

Goal: Prove the soil isn’t toxic.

Scope: one crystal‑clear KPI, two data feeds max, read‑only credentials.

Playbook: Baseline the human‑only metric on Monday. Drop in the agent Tuesday. Compare on Friday. Keep verbose logs in case the seed sprouts mushrooms.

Graduation test: ≥ 20 % improvement or ≤ 5 % error rate—and zero guard‑rail breaches.
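Read literally, the graduation test is an "either KPI bar, but never a breach" rule. A minimal sketch of that reading follows; the function name `passes_pilot` and its percentage-based parameters are assumptions made for illustration.

```python
def passes_pilot(improvement_pct: float, error_rate_pct: float, breaches: int) -> bool:
    # Either KPI bar is enough, but a single guard-rail breach fails the pilot.
    kpi_ok = improvement_pct >= 20 or error_rate_pct <= 5
    return kpi_ok and breaches == 0


print(passes_pilot(improvement_pct=22, error_rate_pct=8, breaches=0))  # True
print(passes_pilot(improvement_pct=25, error_rate_pct=3, breaches=1))  # False
```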

Stage 2 – Sunlight & Fencing (Month 3‑12)

Your sapling looks healthy; time for a bigger pot. Add write permissions, but hammer in a short fence (budget caps, rate limits). Canary releases catch bad pushes before they scorch the orchard. Set up agent‑ops runbooks: rollback scripts, health dashboards, post‑mortems (call them “prune‑mortems” if you love puns).

Stage 3 – Forest‑Ready Autonomy (Year 1 +)

Now the tree throws shade. Introduce auto‑grading harnesses that score every run. Feed insights back to fine‑tune the planner—like annual rings thickening the trunk. Add self‑healing: if a branch breaks (API 503), the agent reroutes and logs a repair ticket.
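The self-healing idea (reroute on a 503 and log a repair ticket) might look like the sketch below. Everything here is hypothetical: `ServiceUnavailable` stands in for whatever error your HTTP client raises on a 503, and the ticket list stands in for a real ticketing system.

```python
class ServiceUnavailable(Exception):
    """Raised by a tool when the upstream API answers 503."""


def call_with_fallback(primary, fallback, tickets: list):
    try:
        return primary()
    except ServiceUnavailable as err:
        tickets.append(f"repair needed: {err}")  # log a repair ticket
        return fallback()                        # reroute to the backup path


def live_inventory():
    # Simulates a broken branch: the primary API is down.
    raise ServiceUnavailable("inventory API returned 503")


def cached_inventory():
    return {"sku-1": 12}


tickets = []
stock = call_with_fallback(live_inventory, cached_inventory, tickets)
print(stock, tickets)
```

Only the specific outage error is caught, so unrelated bugs still surface instead of being silently rerouted.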

North‑Star metric: Keep mean‑time‑to‑detect (MTTD) and mean‑time‑to‑repair (MTTR) under 10 minutes. If storms roll in and your tree barely sways, you’ve earned bragging rights.

Quick checklist before you grab the watering can

Label the pot. Document goal, owner, and shut‑off switch.

Pick pruning shears. Decide who reviews logs and how often.

Set calendar reminders. Quarterly red‑team drills keep bugs (and beetles) away.

Mini‑exercise:

Draw a garden map of one workflow you’d love to automate. Mark the pilot pot, the fenced plot, and the final forest. List one risk to weed at each stage.

12. Tooling & Ecosystem Map

Think of building an agent like stocking a kitchen. You can hunt for ingredients at a farmers’ market (open‑source), subscribe to a meal‑kit service (cloud platforms), or buy a ready‑made frozen entrée (vertical SaaS). The right choice depends on how much chopping versus convenience you crave.

Farmers’ Market — Open‑Source Delights

Stalls overflow with fresh produce like LangChain, AutoGen, and Semantic Kernel. You pick only what you need, combine flavors freely, and pay mostly in elbow grease. Perfect for tinkerers who enjoy seasoning to taste and don’t mind washing extra dishes (a.k.a. writing glue code).

Meal‑Kit Subscription — Cloud PaaS

Too busy to dice onions? Google Vertex Agents and Microsoft Semantic Workbench ship pre‑portioned ingredients plus step‑by‑step cards. You still cook, but the platform handles shopping, refrigeration (scaling), and food safety (security patches). Great when you want creative control without owning a farm.

Frozen Entrée — Vertical SaaS

Need dinner in five minutes? Heat‑and‑eat platforms like UiPath Agent Builder and Salesforce Agentforce arrive fully sauced. Pop them into your existing microwave (workflow) and they serve specific cuisines—automation or CRM—beautifully. Speedy, but don’t expect to swap the béchamel for chimichurri.

Pro tip: Start at the farmers’ market for pilots (cheaper experiments), graduate to meal‑kits for scale, and sprinkle in frozen entrées when a department screams for instant relief.

13. Integrating Agents with RPA

Think of RPA bots as conveyor belts and agents as the clever robot arms that work beside them. The belts move items with perfect consistency; the arms make smart choices when an item arrives slightly off‑center.

Why pair them at all?

RPA excels at muscle memory. It knows exactly where the “Submit” button lives.

Agents excel at improvisation. They notice when the button moved or the form logic changed and adapt on the fly.

Together, they form an assembly line where RPA does the heavy lifting and the agent handles the “uh‑oh” moments that used to stall production.

The Handshake Pattern (Bot ↔ Agent ↔ Bot)

Bot kicks off the job. It scrapes an invoice PDF and parks the data in a staging table.

Agent reviews and decides. It spots a mismatch, consults policy memory, and writes a note: “Line‑item tax looks fishy. Should I adjust?”

Bot finishes the paperwork. If all is well—or after human approval—the bot posts the corrected invoice to the ERP and emails the vendor.

Guard‑rails for a smooth dance

Pass context, not essays. Use JSON payloads or workflow metadata; avoid stuffing the entire PDF into the agent’s prompt.

Short loops, fast fails. Keep bot tasks under 30 seconds. If the agent waits too long, it should trigger an alert instead of hanging.

Audit stamps on every baton pass. Log who, what, when, and why each time control shifts.
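The three guard-rails above can be combined in one small hand-off helper. This is a sketch under assumptions: `hand_off`, `check_bot_duration`, the in-memory `audit_log`, and the invoice fields are all hypothetical, and a real deployment would write to durable storage rather than a Python list.

```python
import json
from datetime import datetime, timezone

BOT_TIMEOUT_SECONDS = 30  # bot tasks longer than this trigger an alert

audit_log = []


def hand_off(who: str, what: str, why: str, context: dict) -> str:
    """Record the baton pass and return the compact JSON payload to send."""
    audit_log.append({
        "who": who,
        "what": what,
        "when": datetime.now(timezone.utc).isoformat(),
        "why": why,
    })
    return json.dumps(context)


def check_bot_duration(elapsed_seconds: float) -> str:
    # Fail fast: alert instead of letting the agent hang on a slow bot.
    return "alert" if elapsed_seconds > BOT_TIMEOUT_SECONDS else "ok"


# The bot passes only the fields the agent needs, not the whole PDF.
payload = hand_off(
    who="invoice_bot",
    what="invoice INV-7 staged for review",
    why="line-item tax mismatch",
    context={"invoice_id": "INV-7", "tax_expected": 19.0, "tax_found": 21.5},
)
print(payload)
print(check_bot_duration(45))  # alert
```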

Quick start recipe (serves 1 department)

Pick one nagging exception flow—something bots kick out to humans daily.

Let the agent propose fixes but keep the bot in charge of final submission.

Measure time‑to‑resolution before vs. after. If you cut it in half, grab a slice of celebratory cake and scale to the next exception.

Next up: guard‑rails aren’t optional. Let’s unpack governance, safety, and ethics before your clever robot arm gets ideas about moving the whole conveyor belt.

14. Governance, Safety & Ethics

Think of deploying an agent like letting a self‑driving car onto city streets. You wouldn’t skip seatbelts, speed limits, or traffic lights. Governance is the rulebook—and the rumble strips—that keep your AI from veering into a fruit stand.

Seatbelts: The Autonomy Envelope

Set hard caps on budget, latency, recursion depth, and tool scope. If the agent tries to spend $501 when the limit is $500, the seatbelt locks. Express these rules in policy‑as‑code (Open Policy Agent, YAML files), so enforcement is automatic, not a forgotten sticky note.
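To show the shape of an autonomy envelope, here is a plain-Python stand-in. It is not Open Policy Agent or Rego; the `Envelope` class, the `violations` function, and every limit except the $500 budget from the example above are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Envelope:
    max_spend_usd: float
    max_latency_s: float
    max_recursion_depth: int
    allowed_tools: frozenset


def violations(env: Envelope, spend_usd: float, latency_s: float, depth: int, tool: str) -> list:
    """Return every rule the proposed action would break (empty list = allowed)."""
    found = []
    if spend_usd > env.max_spend_usd:
        found.append("budget")
    if latency_s > env.max_latency_s:
        found.append("latency")
    if depth > env.max_recursion_depth:
        found.append("recursion_depth")
    if tool not in env.allowed_tools:
        found.append("tool_scope")
    return found


env = Envelope(
    max_spend_usd=500,
    max_latency_s=10,
    max_recursion_depth=5,
    allowed_tools=frozenset({"issue_refund", "send_email"}),
)
print(violations(env, spend_usd=501, latency_s=2, depth=1, tool="issue_refund"))  # ['budget']
```

Returning the full list of broken rules, rather than stopping at the first, gives the audit log a complete picture of why an action was blocked.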

Dashcam: Explainability Logs

Imagine every action stamped with a dashcam frame: goal → plan → tool_call → result → rationale. When auditors ask, “Why did the agent refund $123 at 2 a.m.?,” you rewind the footage and replay the run in minutes, not days.
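A dashcam frame can be one JSON line per action. The sketch below uses the five fields named above; the helper names `dashcam_frame` and `replay`, and the sample values, are hypothetical.

```python
import json


def dashcam_frame(goal, plan, tool_call, result, rationale) -> str:
    """Serialise one action as a single JSON line, fields in dashcam order."""
    frame = {
        "goal": goal,
        "plan": plan,
        "tool_call": tool_call,
        "result": result,
        "rationale": rationale,
    }
    return json.dumps(frame)


def replay(lines):
    """Rebuild a run from its log lines so an auditor can step through it."""
    return [json.loads(line) for line in lines]


log_lines = [
    dashcam_frame(
        goal="resolve refund request",
        plan=["verify order", "check policy", "issue refund"],
        tool_call={"name": "issue_refund", "amount": 123},
        result="ok",
        rationale="order verified and amount is within policy",
    )
]
for frame in replay(log_lines):
    print(frame["tool_call"], "because", frame["rationale"])
```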

Traffic Lights: Human Escalation Matrix

Green means “go” for tiny, low‑risk actions. Yellow demands a quick glance. Red forces a full stop until a human waves the flag. Define thresholds—“Transactions over $10k require manager approval”—so the car knows when to park.
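An escalation matrix for transaction size reduces to a pair of thresholds. In this sketch the $10k red line comes from the example above, while the $1k yellow line and the function name `traffic_light` are assumptions you would replace with your own policy.

```python
def traffic_light(amount_usd: float, yellow_from: float = 1_000, red_from: float = 10_000) -> str:
    if amount_usd > red_from:
        return "red"     # full stop until a manager approves
    if amount_usd >= yellow_from:
        return "yellow"  # proceed, but flag for a quick human glance
    return "green"       # low risk, no human needed


for amount in (50, 5_000, 25_000):
    print(amount, traffic_light(amount))
```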

Road Rules: Regulatory Alignment

Different cities, different speed limits. Map your deployment to ISO/IEC 42001, the EU AI Act, or NIST AI RMF depending on your jurisdiction. Sticker these rules on the windshield early; retro‑fitting compliance is like replacing brakes while barreling downhill.

Crash Tests: Red‑Team Drills

Once a quarter, play the mischievous pedestrian. Attempt prompt‑injection, data exfiltration, or API overload. Log what broke, patch, repeat. Better to dent the bumper in your test track than on Main Street.

Quick safety starter kit

Draft a one‑page autonomy envelope.

Turn on full trace logging (plan, tool calls, results) before the first prod run.

Publish a traffic‑light chart your team can recite without reading.

Book the first red‑team drill before the champagne launch.

With guard‑rails bolted on, we can talk about measuring performance—because seatbelts matter, but dashboards tell you if you’re winning the race.

15. Evaluation Metrics & Benchmarks

Metrics are like your agent’s report card. You wouldn’t ignore a failing grade, so don’t skip dashboards when your AI starts cooking. Here’s how to tell honor students from dropouts.

Task-success rate measures how often the agent actually finishes the goal versus getting tripped up. Aim for at least 85 %, so your agent isn’t spending more time troubleshooting than delivering value.

Tool-call precision is the share of tool usages that worked as expected—“Did my API call actually post the invoice?”—with a target north of 95 % to keep costs and security risks low.

Regret score tracks how many times the agent had to backtrack and undo actions. Think of it as your agent’s “oops” counter: keep it under 0.1 per run (at most one undo in every ten runs) so the agent makes steady progress instead of looping endlessly.

Latency (P95) is the time within which 95 % of runs finish, so it reflects the slow tail rather than the average. If your agent is racing a human, you want even those slow runs to finish at least as fast as a person, ideally faster.

Cost per run tallies tokens, compute, and tooling expenses. Watch for a 30 % YoY reduction as you optimize and scale. A shrinking cost per run means the system is getting more efficient, not just burning budget.
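If you log one record per run, the whole report card fits in a short function. The sketch below is one possible way to compute the five metrics; the record fields and the sample numbers are hypothetical, and P95 uses the simple nearest-rank method, which is only one of several common percentile definitions.

```python
# Hypothetical run records; field names are assumptions for this sketch.
runs = [
    {"success": True,  "tool_calls": 4, "tool_ok": 4, "undos": 0, "latency_s": 12.0, "cost_usd": 0.08},
    {"success": True,  "tool_calls": 5, "tool_ok": 5, "undos": 0, "latency_s": 15.0, "cost_usd": 0.10},
    {"success": False, "tool_calls": 6, "tool_ok": 5, "undos": 1, "latency_s": 40.0, "cost_usd": 0.20},
    {"success": True,  "tool_calls": 3, "tool_ok": 3, "undos": 0, "latency_s": 10.0, "cost_usd": 0.06},
    {"success": True,  "tool_calls": 2, "tool_ok": 2, "undos": 0, "latency_s": 9.0,  "cost_usd": 0.06},
]


def summarize(runs):
    """Compute the five report-card metrics from a list of run records."""
    n = len(runs)
    total_calls = sum(r["tool_calls"] for r in runs)
    latencies = sorted(r["latency_s"] for r in runs)
    # Nearest-rank P95: the smallest latency that at least 95% of runs do not exceed.
    p95_rank = (95 * n + 99) // 100  # integer ceiling of 0.95 * n
    return {
        "task_success_rate": sum(r["success"] for r in runs) / n,
        "tool_call_precision": sum(r["tool_ok"] for r in runs) / total_calls,
        "regret_per_run": sum(r["undos"] for r in runs) / n,
        "latency_p95_s": latencies[p95_rank - 1],
        "cost_per_run_usd": sum(r["cost_usd"] for r in runs) / n,
    }


report = summarize(runs)
for name, value in report.items():
    print(f"{name}: {value:.3f}")
```

With these made-up runs, the agent would miss the 85 % success target and the 0.1 regret ceiling, which is exactly the kind of failing grade a weekly check should surface.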

Public benchmarks like AgentBench v2 and GAIA let you compare your grades against peer institutions. Run weekly checks so you spot grade inflation—or a surprise F—before investors ask.

16. Workforce Impact & Change Management

Imagine your office as a bustling kitchen. When the sous-chef (agent) starts flipping omelettes, the head chef (human) stops cracking eggs and starts perfecting the menu. Agents don’t replace people; they move them to higher-value work.

At first, only 9 % of tasks vanish—simple chores like manually reconciling spreadsheets get handed over. Every other task morphs: humans shift from clicking buttons to overseeing, verifying, and refining what the agent does. It’s like the head chef tasting each dish instead of washing pans.

New roles on the brigade

Agent Ops Engineer: Designs the recipe workflow, monitors the stove (pipelines), and tweaks oven settings (guard-rails).

Prompt Engineer / Conversation Designer: Crafts the chef’s instructions—clear, unambiguous recipes that agents follow.

Domain Verifier: Brings expert taste tests—reviewing any high-stakes output before it hits the dining room.

Stirring change smoothly

Map your kitchen. List who does what today, then draw arrows showing how tasks shift to agents.

Train the chefs. Build 30-60-90 learning plans: agent-debug tutorials, policy cookbooks, and emergency off-ramp drills.

Shadow shifts. Let humans sit next to agents for a week—review every move until trust grows.

17. Case Studies & Success Stories

Real proof in the pudding—four stories of agents delivering measurable impact. These aren’t pilot whims; they’re deployed systems moving needles across industries.

NVIDIA Robotics: Imagine a warehouse as a giant library, each tote a book waiting to be picked. NVIDIA deployed mobile robots with agentic brains to navigate aisles, scan barcodes, and deliver totes to packers. The result? 35 % fewer picking errors in a Boston pilot[^16]—equivalent to reducing mis-shelved books by thousands per week.

Microsoft Copilot for Finance: In one finance department, controllers paired an L3 operator agent with their ERP. The agent vetted batches of journal entries, posted approved entries overnight, and flagged anomalies. The payoff: 27 % faster month-end close[^8], slashing overtime and giving accountants back precious evenings.

GitHub PR-Agent: Software teams face a traffic jam of pull-requests. GitHub’s agent triages PRs, runs basic tests, suggests improvements, and merges low-risk fixes under human-set thresholds. Adoption across enterprise clients drove a 40 % increase in merged fixes per sprint[^2], turning backlog bottlenecks into smooth code pipelines.

Aisera IT Help Desk: IT support tickets are like spam reaching an inbox—most get deleted, some demand answers. Aisera’s Slack-based agent triages common requests (password resets, access issues), resolves 60 % of tickets end-to-end[^9], and escalates complex cases to human engineers. The net: dramatically lighter queues and a calmer help-desk shift.

Common thread: each case starts with a high-volume, repeatable task (picking, posting, pulling, or replying) and overlays autonomous decision-making. The next section digs into pitfalls and fixes to keep your own story from derailing.

18. Common Pitfalls & Fixes

Even the best chefs burn a dish now and then. Here are three common missteps when deploying agents—and the secret sauces to fix them.

Pitfall: Over-automation

What happens: You give your agent too much freedom, and it starts handling exotic edge cases—like your intern trying to bake soufflés before mastering omelettes. Suddenly, errors skyrocket.

Fix: Start at Level 1 (Assistant). Only after the agent reliably nails simple tasks (≥ 90 % success) do you promote it. Treat each level like a cooking lesson; master one recipe before moving on.
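A promotion rule like this is easy to encode so nobody promotes an agent on a hunch. In the sketch below, the 90 % threshold comes from the fix above, while the minimum of 50 runs and the function name are assumptions added so a lucky streak of five tasks does not count as mastery.

```python
def ready_to_promote(outcomes, *, threshold=0.90, min_runs=50):
    """Return True when the agent has enough runs and a high enough success rate.

    outcomes is a list of booleans, one per completed task at the current level.
    min_runs is an assumed sample-size floor, not a figure from the guide.
    """
    if len(outcomes) < min_runs:
        return False  # not enough evidence yet
    return sum(outcomes) / len(outcomes) >= threshold


print(ready_to_promote([True] * 45 + [False] * 5))   # 90 % over 50 runs
print(ready_to_promote([True] * 44 + [False] * 6))   # 88 % over 50 runs
print(ready_to_promote([True] * 10))                 # perfect, but only 10 runs
```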

Pitfall: Excessive privileges

What happens: The agent has the keys to the kingdom, and one bad tool call deletes production data.

Fix: Enforce least-privilege tokens. Think of each API credential as a kitchen knife: give only the butter knife until you’re sure they can handle a chef’s knife. Rotate and audit credentials weekly.

Pitfall: Data leakage

What happens: You feed the agent sensitive PII in prompts, and logs spill customer data across your dev environment.

Fix: Mask or tokenize PII before it hits the agent. Redact logs and backups like you’d shred old receipts—so nobody can piece together personal details.
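As a rough illustration of masking before the prompt ever reaches the agent, the sketch below swaps email addresses and US-style Social Security numbers for placeholders using regular expressions. The patterns, placeholder labels, and sample prompt are assumptions and far from exhaustive; real deployments usually lean on a dedicated PII-detection service, since regexes alone miss names, addresses, and many ID formats.

```python
import re

# Deliberately simple patterns; they will not catch every real-world format.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
SSN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


def mask_pii(text):
    """Replace emails and SSN-shaped numbers with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    text = SSN.sub("[SSN]", text)
    return text


prompt = "Refund jane.doe@example.com, SSN 123-45-6789, order 4411."
masked = mask_pii(prompt)
print(masked)  # Refund [EMAIL], SSN [SSN], order 4411.
```

Running the same function over log lines before they are written keeps the dashcam footage useful without spilling customer details.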

Quick reminder: Every pitfall is an opportunity to tighten guard-rails, refine policies, and train your team on best practices. A few precautionary tweaks now save a world of headaches later.

19. Future Horizons

Peek into the crystal ball—what’s on the horizon for agents? These emerging trends aren’t sci‑fi; they’re pilot programs bubbling up in labs and press releases.

Multi‑modal agents—eyes meet brains. Imagine an agent that sees a factory floor, reads the floor plan, and plans maintenance, then acts by dispatching a drone to inspect a conveyor belt. Vision + language models team up for richer, context‑aware decisions. Expect medical triage agents reading X‑rays and chart notes together by 2026.

Decentralised swarms—the hive mind. Instead of one mega‑agent, dozens of micro‑agents coordinate peer‑to‑peer—like a swarm of bees scouting flowers. They’ll trade data directly over secure channels, handing off tasks seamlessly without a central server. Ideal for edge computing scenarios where latency is life.

Self‑evaluating loops—the agent audit committee. Forget manual reports; agents will run monthly health checks on each other, grading performance, spotting drift, and proposing retraining. Think of a QA pipeline where the testers also write the test cases and fix the bugs.

Emotion‑aware agents—the gentle concierge. Early R&D is blending sentiment analysis with agentic loops, so customer‑service bots detect frustration in tone, pause automated replies, and hand off to a human before anger escalates.

Takeaway: The road ahead looks less like a single highway and more like a network of intelligent pathways: agents that see, talk, self-critique, and team up. Bookmark these trends and revisit them every quarter.

20. FAQ & Further Resources

You’ve got questions—let’s answer them like a friendly barista handing over your favorite brew. Quick sips of clear info before you head back to the grind.

Is agentic AI the same as AGI?

Short take: No. Agentic AI is like a clever sous-chef trained on specific recipes (tasks). AGI would be a Michelin-star chef who invents new cuisines on the fly—still the stuff of dreams.

How do I prove ROI?

Short take: Measure the before-and-after deltas on one key metric—cycle time, error rate, volume processed. A simple “seconds saved per run × runs per day” formula gets CFO buy-in fast.
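Here is that formula as a tiny worked example. The inputs (90 seconds saved per run, 400 runs per day, a $60 loaded hourly cost) are invented for illustration, and the conversion to dollars is an assumed extension of the "seconds saved × runs per day" formula rather than part of it.

```python
def daily_savings(seconds_saved_per_run, runs_per_day, hourly_cost_usd):
    """Return (hours saved per day, dollar value of those hours)."""
    seconds = seconds_saved_per_run * runs_per_day
    hours = seconds / 3600
    return hours, hours * hourly_cost_usd


# Hypothetical inputs: 90 s saved per run, 400 runs a day, $60/hour loaded cost.
hours, dollars = daily_savings(90, 400, 60.0)
print(f"{hours:.1f} hours/day, ${dollars:,.2f}/day")  # 10.0 hours/day, $600.00/day
```

Subtract the agent's own cost per day from the dollar figure and you have a net number a CFO can check on a napkin.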

Where can I try it today?

Short take: Start with the free tiers: Google Vertex Agents Playground for PaaS experiments; Microsoft Semantic Workbench for end-to-end pipelines; and the LangChain GitHub repo for DIY labs.

What if I need help?

Short take: Join vibrant communities: the ai-agent Discord server, #agents on Stack Overflow, and vendor forums (UiPath, Salesforce).

What pitfalls should I avoid?

Short take: Don’t skip pilot stages, least-privilege auth, or audit logging—those are your guard-rails, not optional add-ons.

Final sip: Bookmark this guide, print out the quick checklists, and bring it to your next team sync. With the right blend of caution and curiosity, your first agent can brew big wins, no barista apron required.

21. Thank you for sticking around

If you’ve made it this far, congratulations, you’re officially agentic-curious (and we love that for you).

This wasn’t a short scroll. You’ve wandered through decision loops, metaphorical kitchens, robo-warehouses, audit logs, and vision-enhanced swarms. You now know more than 98% of LinkedIn about agentic AI — but the real win? You’re thinking like an agent already. Sensing, planning, acting… exploring.

If you enjoyed the ride, here’s how to keep the momentum going:

🎧 Subscribe on Spotify or YouTube for weekly breakdowns and conversations where we unpack the future without the jargon.

📝 Browse the rest of our site — We’ve got more posts like this, minus the long scroll and plus fresh analogies.

Thank you again for reading. Now go build something delightfully autonomous, or at least, tell your favorite human about it.

22. Sources

https://cloud.google.com/vertex-ai/generative-ai/docs/agent-development-kit/quickstart

https://www.techtarget.com/searchenterpriseai/definition/agentic-AI

https://www.voguebusiness.com/story/technology/whats-agentic-ai-and-what-should-brands-know-about-it

https://www.uipath.com/blog/product-and-updates/new-era-agentic-automation-begins-today

https://hbr.org/2024/12/what-is-agentic-ai-and-how-will-it-change-work

https://www.nvidia.com/en-us/about-nvidia/ai-robotics-warehouse-pilot-press-release/