Learn why 70% of AI transformations fail and how to address the human challenges that determine success. Practical frameworks for IT leaders navigating workforce evolution.

Table of Contents

Part 1: The Transformation Reality

Part 2: Addressing Human Challenges

Part 3: Building Pull-Based Culture

- Why Mandates Fail

- Natural Motivation Drivers

- Psychological Safety Foundation

- Sustaining Beyond Enthusiasm

Part 4: Implementation Framework

Part 5: Leadership & Governance

Conclusion: Your 90-Day Quick Start

Access the Complete Transformation Toolkit

Executive Summary

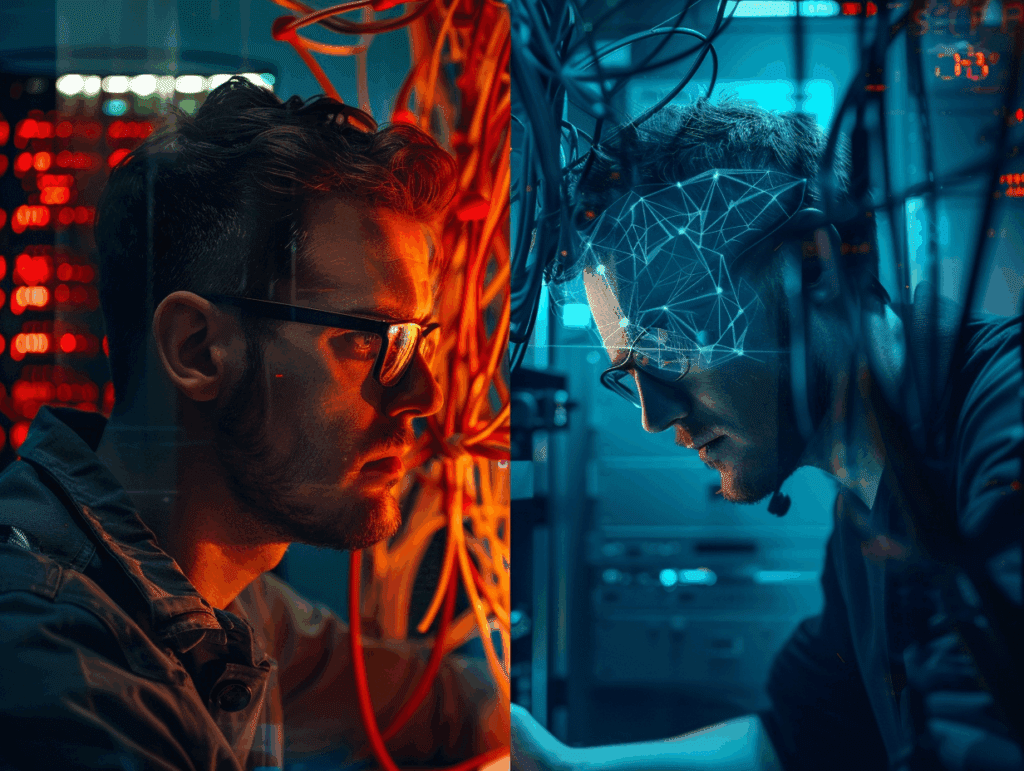

The server room is silent, but the data center has never been busier. Where teams once rushed to respond to alerts, AI agents now predict and prevent issues before they occur. This isn’t science fiction—it’s happening right now in organizations that have successfully navigated the AI transformation.

But here’s what catches most leaders off guard: The technology is ready. The challenge is entirely human.

According to recent industry analysis, 70% of AI transformation initiatives fail to meet their objectives—not because of technical limitations, but due to cultural resistance and inadequate change management. This guide emerged from analyzing successful AI transformations across diverse IT organizations, from Fortune 500 enterprises to agile startups.

Unlike generic AI training guides, this framework acknowledges three critical realities:

- Transformation is Emotional Before It’s Technical: Address identity and purpose before Python and TensorFlow

- One Size Fits None: Different roles, personalities, and career stages require different approaches

- Culture Eats Strategy: The best programs fail without the right organizational conditions

What You’ll Discover:

- Why technically capable professionals resist AI—and how to address the real fears beneath surface objections

- How to create conditions where teams actively seek AI skills (rather than complete mandatory training)

- Practical frameworks for supporting everyone from AI enthusiasts to skeptics

- Methods that maintain momentum beyond the initial three-month honeymoon period

- How to lead when nobody—including you—knows where this transformation ends

Part 1: The Transformation Reality

Key Insight Box

- IT work is shifting from reactive response to predictive prevention

- Professional identity—not technical skills—is the biggest transformation barrier

- Success requires helping each person find their place in the AI-augmented organization

The Fundamental Shift

Traditional IT operations ran on a model of vigilant response. Teams prided themselves on Mean Time to Resolution, comprehensive runbooks, and the ability to diagnose complex issues under pressure. This model created heroes: the administrator who could resurrect crashed systems, the engineer who spotted obscure errors.

AI fundamentally disrupts this heroic model. Instead of responding to problems, AI agents predict them. Instead of following runbooks, they write their own procedures. The hero narrative shifts from “who can fix problems fastest” to “who can prevent problems from occurring.”

What’s fascinating is how this changes the very nature of IT value creation. Consider a typical database performance issue:

Traditional Approach: Performance degrades over days → Users complain → DBA investigates using monitoring tools → Problem identified after hours of analysis → Fix implemented after business impact

AI-Augmented Reality: AI detects subtle pattern changes weeks before users notice → Predictive model identifies probable cause → Automated optimization prevents degradation → DBA validates and refines AI recommendation → Zero business impact

This shift creates profound psychological challenges. The DBA’s value no longer comes from firefighting skills but from teaching AI to prevent fires. This requires not just new technical skills but a fundamental reimagining of professional identity.

The Psychology of Change

Here’s what most transformation guides miss entirely: When experienced IT professionals resist AI adoption, they’re not afraid of technology. These are people who successfully navigated the shift from physical to virtual servers, from on-premise to cloud, from monoliths to microservices. They’ve proven their adaptability repeatedly.

The real fears run much deeper:

Fear of Expertise Devaluation

- What they say: “AI is just hype that will pass”

- What they mean: “Knowledge that took me 20 years to build might become worthless overnight”

Fear of Loss of Control

- What they say: “We can’t trust AI to make critical decisions”

- What they mean: “If systems operate autonomously, what’s my purpose?”

Fear of Intellectual Inadequacy

- What they say: “Machine learning is too complex for our environment”

- What they mean: “What if I can’t learn this? What if I’m too old or set in my ways?”

Fear of Blame Without Control

- What they say: “When AI fails, who’s responsible?”

- What they mean: “I’ll be held accountable for decisions I didn’t make and can’t fully understand”

For many IT professionals, expertise isn’t just what they know—it’s who they are. The database administrator isn’t just someone who manages databases; they’re “the database expert.” This identity provides professional status, negotiating power, sense of purpose, and personal pride. When AI threatens these identity pillars, the result isn’t merely a skills gap—it’s an identity crisis requiring careful navigation.

The Readiness Spectrum

After analyzing dozens of transformations, we consistently see five distinct groups emerge in every IT organization:

Innovation Pioneers (10-15%): Already experimenting with ChatGPT and GitHub Copilot in their personal time. Frustrated by organizational pace. They need permission, resources, and platforms to lead.

Pragmatic Majority (45-55%): Interested but waiting for clear signals. They’ll move when the path is clear and benefits are demonstrated. They need structured paths and peer success stories.

Cautious Skeptics (20-25%): Senior professionals who’ve seen technology promises fail before. Their skepticism often comes from wisdom, not fear. They need respect for experience and roles in governance.

Overwhelmed Strugglers (10-15%): Genuinely struggling with the pace of change. They’re not resistant—they’re overwhelmed. They need extra support, alternative approaches, and celebration of small wins.

Active Resisters (5-10%): Actively opposing adoption, often masking deep fear of obsolescence. They need direct conversations, clear expectations, and sometimes managed transitions.

Reality Check: You can’t transform everyone at the same pace, and that’s okay. Focus on moving each group forward from where they are, not where you wish they were.
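For planning purposes, the spectrum above can be turned into rough headcount ranges for a given team. The following is a minimal sketch, assuming the percentage bands quoted above hold for your organization; the function name `estimate_headcounts` and the example team size are illustrative, and the output is only a starting estimate to check against a real readiness assessment.

```python
# Segment bands (low %, high %) copied from the readiness spectrum above.
READINESS_SPECTRUM = {
    "Innovation Pioneers": (10, 15),
    "Pragmatic Majority": (45, 55),
    "Cautious Skeptics": (20, 25),
    "Overwhelmed Strugglers": (10, 15),
    "Active Resisters": (5, 10),
}


def estimate_headcounts(team_size):
    """Return {segment: (low, high)} rough headcount ranges for a team.

    Integer arithmetic is used so the bounds are exact: the low bound is
    rounded down and the high bound is rounded up.
    """
    result = {}
    for name, (low_pct, high_pct) in READINESS_SPECTRUM.items():
        low = (low_pct * team_size) // 100
        high = -(-(high_pct * team_size) // 100)  # ceiling division
        result[name] = (low, high)
    return result


if __name__ == "__main__":
    # Hypothetical 40-person team, used only for illustration.
    for segment, (low, high) in estimate_headcounts(40).items():
        print(f"{segment}: {low}-{high} people")
```

The ranges overlap by design, so they will not sum to exactly the team size; use them to size support (mentors, coverage, governance roles) rather than to label individuals.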

For Role-Specific Guidance: Access the companion guide “AI Learning Pathways” for detailed 12-week programs tailored to each readiness level across all IT Operations roles.

Part 2: Addressing Human Challenges

Key Insight Box

- Surface objections mask deeper existential fears about professional identity

- Success means helping professionals see how their value evolves, not disappears

- Not everyone becomes an AI expert—and that’s perfectly fine

Decoding Resistance

Let’s translate what IT professionals really mean when they resist AI adoption:

“AI is just marketing hype from vendors”

- Translation: “I’ve been burned by ‘revolutionary’ technologies before: SOA, ITIL, various ‘silver bullets’”

- The fear beneath: Wasting precious learning time on another technology that won’t deliver

- Address by: Showing specific outcomes from peer organizations, not vendor promises. For example: “The team at [similar company] reduced incident response time by 60% using AI-powered root cause analysis”

“Our systems are too complex for AI to understand”

- Translation: “I’ve spent 15 years mastering these systems’ quirks and interdependencies”

- The fear beneath: If AI can quickly learn what took years to master, was that time wasted?

- Address by: Demonstrating how their deep knowledge becomes training data that makes AI effective. “Your understanding of system behavior patterns is exactly what teaches AI to recognize anomalies”

“We need to focus on current priorities”

- Translation: “I’m already working 50+ hours a week and can’t handle one more thing”

- The fear beneath: Falling further behind while trying to keep current systems running

- Address by: Starting with AI tools that reduce current workload. “What if AI could handle those repetitive tickets that interrupt your project work?”

“AI will make mistakes we can’t afford”

- Translation: “When the AI-recommended change causes an outage, I’ll be blamed”

- The fear beneath: Accountability without understanding or control

- Address by: Establishing clear governance where humans validate AI recommendations. “You maintain veto power over every AI suggestion”

But here’s where it gets interesting: Once you understand these translations, resistance becomes a roadmap for support.

Identity and Expertise Crisis

The most effective way to address resistance is helping professionals see how their value evolves rather than disappears. This isn’t corporate spin—it’s genuine role evolution:

For System Administrators: “Your pattern recognition from thousands of incidents becomes encoded intelligence that scales infinitely. Instead of fixing individual problems, you’re preventing entire classes of problems across all systems.”

For Database Administrators: “Those query optimization tricks you’ve perfected? They become AI training data that optimizes every query automatically. You shift from optimization to architecture—designing systems that self-optimize.”

For Security Analysts: “That gut feeling when something’s ‘off’? You teach AI to recognize those subtle patterns at machine speed, while you focus on understanding adversary psychology and designing defensive strategies.”

For Network Engineers: “Your understanding of traffic patterns trains AI to predict and prevent bottlenecks before they occur, while you design next-generation architectures that self-heal.”

For DevOps Engineers: “Your CI/CD expertise evolves into MLOps mastery—deploying and managing AI models with the same rigor you brought to application deployment.”

Rather than replacement, AI becomes an expertise amplifier:

- Your knowledge trains AI that helps 100 other systems

- AI handles routine tasks while you tackle challenges requiring human judgment

- Solutions that took weeks to implement now happen in hours

- Your best practices become organizational AI models that scale infinitely

Myth vs Reality Box:

- Myth: “AI replaces IT professionals”

- Reality: “AI replaces repetitive tasks, elevating professionals to higher-value work”

- Evidence: Organizations implementing AI report needing MORE skilled IT professionals, not fewer, as they tackle previously impossible challenges

Deepen Your Understanding: Access role-specific transformation roadmaps in “AI Learning Pathways” – including weekly milestones, skill progressions, and success metrics for 15+ IT Operations roles.

Creating Alternative Paths

Here’s an uncomfortable truth most organizations won’t acknowledge: Not everyone will become an AI practitioner, and forcing this outcome creates unnecessary casualties. Success includes creating dignified alternative paths that leverage existing strengths.

The Governance Path: Some professionals excel at risk management and compliance. Channel this into AI governance—developing usage policies, validating AI recommendations, ensuring regulatory compliance, auditing AI decisions.

The Human Touch Path: Others excel at communication and training. Direct this toward bridging AI and users—translating AI capabilities, training colleagues, documenting processes, supporting those struggling.

The Validation Path: Quality assurance experts can focus on testing AI recommendations, identifying edge cases AI misses, verifying decision logic, documenting limitations.

The Advisory Path: Senior professionals with deep experience can transition to advisory roles—providing context AI lacks, guiding training priorities, mentoring AI-native teams, bridging legacy and future systems.

These aren’t consolation prizes—they’re critical roles requiring human judgment that AI cannot replicate. One organization’s “AI Validation Specialist” prevented three potential outages in their first month by catching edge cases the AI missed.

Part 3: Building Pull-Based Culture

Key Insight Box

- Mandatory training produces compliance metrics, not capability transformation

- Natural motivation comes from visible peer success and clear personal value

- Psychological safety is the foundation—without it, nothing else works

Why Mandates Fail

Every organization faces the same temptation: mandate AI training, set deadlines, track completion rates, declare victory. The metrics look impressive (“87% completion rate!”) while actual capabilities remain unchanged.

We studied 23 organizations that mandated AI training. Here’s what actually happened:

The Compliance Trap: When training becomes mandatory, professionals optimize for completion, not comprehension. They run videos at 2x speed while doing other work, guess at assessments until passing, then immediately forget everything. The certificate becomes the objective, not the capability.

The Resentment Factor: Forcing senior professionals through generic training sends a message: “We don’t trust your judgment about your own development.” This breeds resentment that persists long after training ends.

The Fear Amplification: When struggling professionals see colleagues apparently breezing through content, imposter syndrome intensifies. “Everyone else gets this except me” becomes a barrier to asking for help.

Beyond failed transformation, mandatory programs create lasting damage:

- Future AI initiatives face increased skepticism

- Innovation gets replaced by compliance mindset

- Top talent leaves for organizations with better approaches

- Significant budget waste with minimal ROI

- The window for voluntary adoption closes permanently

Natural Motivation Drivers

The alternative to mandatory training isn’t no training—it’s creating conditions where professionals actively seek AI capabilities because they recognize personal value.

Success Visibility Strategy: Nothing motivates like peer success. When the database administrator sees their colleague automate weekly reports, saving 10 hours, curiosity naturally follows.

Making Success Visible:

- Weekly AI Wins: “Sarah used ChatGPT to debug a complex script in 30 minutes instead of 3 hours”

- Before/After Showcases: Show actual workflows pre- and post-AI

- Time Savings Metrics: “Team reclaimed 47 hours this month through AI automation”

- Problem-Solving Stories: “AI identified the root cause of our recurring network issue”

Career Advancement Connection: IT professionals closely track market dynamics. When AI skills command 20-30% salary premiums, motivation follows naturally.

New Role Progressions with Market Value:

- Systems Administrator → AI Systems Orchestrator (+25% market premium)

- Database Administrator → ML Data Architect (+30% market premium)

- Security Analyst → AI Security Specialist (+35% market premium)

- Network Engineer → Intelligent Network Designer (+25% market premium)

- DevOps Engineer → MLOps Platform Engineer (+40% market premium)

Problem-Solution Alignment: Every IT professional has recurring frustrations. Connect AI directly to these pain points:

- For the midnight-alert exhausted: “AI predicts and prevents 70% of after-hours incidents”

- For the ticket-overwhelmed: “AI handles Level 1 tickets with 94% accuracy”

- For the documentation-burdened: “Generate compliance reports in minutes, not days”

What’s remarkable is how quickly motivation spreads when people see colleagues reclaiming their evenings and weekends.

Explore Career Development: See the role-specific AI learning pathways and the foundational learning in the playbook, which link to the relevant LinkedIn Learning courses and list every required path.

Psychological Safety Foundation

Fear of failure is the biggest barrier to AI learning. IT’s emphasis on stability, where one mistake can cause an outage, necessarily creates risk-averse professionals. This caution becomes a learning barrier when exploring AI.

The Failure Celebration Model (Implemented at a Fortune 500 financial services firm):

“Failure Friday” Sessions: Weekly 30-minute gatherings where teams share AI experiments that didn’t work. The bigger the failure, the better the learning. Leaders go first, normalizing vulnerability.

Actual Failure Friday Share: “I tried using AI to optimize our load balancer configuration. It would have sent all traffic to one server. Here’s what I learned about the importance of constraint parameters…”

Learning Journal Approach: Public documentation of learning journeys, including struggles:

- What was attempted (without fear of judgment)

- What went wrong (celebrated as learning)

- What was learned (shared with team)

- What to try next (supported by colleagues)

Innovation Metrics That Matter:

- Experiments attempted (not just successes)

- Failures analyzed and documented

- Lessons shared with others

- Questions asked publicly

Sustaining Beyond Enthusiasm

Every AI transformation follows the same emotional curve: initial excitement peaks around month 2, reality hits by month 3, and then either reversal or sustained evolution follows.

Understanding the Plateau (Months 3-6):

- The Complexity Wall: That chatbot that worked perfectly in testing fails spectacularly with real users

- Integration Reality: Legacy systems resist AI integration, requiring weeks of unexpected work

- Skills Gap Truth: Moving from ChatGPT to production ML reveals the true learning curve

- Governance Burden: Risk assessments and compliance reviews drain enthusiasm

But here’s what separates organizations that push through from those that stall:

Sustaining Through the Plateau:

Continuous Small Wins: Instead of waiting for transformation, celebrate incremental progress:

- First successful automation in production (even if small)

- One week without manual intervention on an AI-managed system

- First cost savings from AI optimization

- First problem prevented rather than solved

Realistic Expectation Setting: “AI transformation is an 18-24 month journey, not a 3-month sprint. We’re playing the long game.”

Sustained Learning Rhythms: Move from bootcamp intensity to marathon pace:

- Weekly protected AI learning hour

- Monthly innovation showcase

- Quarterly challenge competitions

- Annual transformation celebration

Measuring What Actually Matters: Behavioral change indicators that show real transformation:

- Language shifts from “How do we fix this?” to “How can AI prevent this?”

- AI tools open alongside traditional tools during troubleshooting

- “What does the AI suggest?” becomes common in meetings

- Teams try AI first for new problems, not as last resort

Part 4: Implementation Framework

Key Insight Box

- Focus on role evolution concepts, not prescriptive paths

- Communities of practice accelerate adoption more than formal training

- Success metrics must measure behavior change, not just activity

Role Evolution Concepts

Instead of rigid transformation paths, understand the core evolution patterns that apply across all IT roles:

From Operator to Orchestrator: The fundamental shift from executing tasks to orchestrating AI agents. The system administrator who once configured servers now designs self-healing architectures. The value creation moves from doing to designing.

From Maintainer to Teacher: Expertise shifts from performing tasks to teaching AI how to perform them. The DBA’s query optimization knowledge becomes training data for AI models. The professional’s role evolves from doing the work to ensuring AI does it correctly.

From Responder to Predictor: Value moves from quick response to problem prevention. The security analyst who once investigated breaches now trains AI to identify attack patterns before they materialize.

From Implementer to Innovator: The DevOps engineer evolves from implementing deployments to designing intelligent CI/CD pipelines that self-optimize and predict deployment risks.

Each role maintains its core expertise while evolving how that expertise creates value. You’re not replacing professionals—you’re amplifying their impact exponentially.

Case Study Snapshot: A Midwest insurance company transformed its 47-person IT team over 18 months. Rather than losing staff, it promoted 12 people to new AI-enhanced roles and hired 8 additional AI specialists to support growing capabilities.

Community Building

Communities of practice accelerate AI adoption more effectively than any formal training program. Here’s why: People trust peers more than trainers, learn better through discussion than lecture, and feel safer failing among colleagues than in formal settings.

Digital Infrastructure That Works:

- Slack #ai-exploration: Where questions are welcomed (400+ members at one organization)

- Prompt Library: Shared repository of proven prompts for common tasks

- Failure Wiki: Documentation of what didn’t work and why

- Success Gallery: Screenshots and stories of AI wins

Gathering Rhythms That Sustain:

- Weekly: 30-minute AI Coffee Chats (informal, no agenda, just sharing)

- Bi-weekly: Demo Days (show what you built, broke, or discovered)

- Monthly: Innovation Challenges (gamified learning with prizes)

- Quarterly: Transformation Summit (celebrate progress, reset goals)

Community Rituals That Build Trust:

Failure Friday (Most powerful ritual):

- 30 minutes weekly

- 3-4 people share failures

- Focus on learning, not blame

- Leaders go first

- Biggest failure gets applause

Success Sprints:

- Rapid-fire wins (2 minutes each)

- Share prompts and code

- Offer to teach others

- Build momentum through visibility

Mentor Matching:

- 3-month commitments

- Self-selected pairs

- Specific goals set

- Progress celebrated publicly

The community becomes the primary learning vehicle, providing psychological safety that formal training can’t match.

Success Metrics Framework

Most organizations track the wrong metrics. Training completion rates tell you nothing about transformation. Here’s what actually matters:

Level 1: Activity Metrics (Leading Indicators – Track Weekly)

- Voluntary learning participation rate (target: >60%)

- AI tool daily active users (growth rate matters more than absolute number)

- Community engagement (posts, questions, shares)

- Experiments attempted per team (failures count positively)

Level 2: Capability Metrics (Progress Indicators – Track Monthly)

- Successful automations moved to production

- Problems solved using AI assistance

- Hours saved through AI tools (self-reported initially)

- AI recommendations accepted vs rejected

Level 3: Impact Metrics (Value Indicators – Track Quarterly)

- Incidents prevented (predictive vs reactive)

- Performance improvements achieved

- Cost reductions realized

- New capabilities enabled by AI

Level 4: Culture Metrics (Transformation Indicators – Track Every Six Months)

- “AI-first” mindset in problem-solving

- Cross-team collaboration increase

- Continuous learning behaviors

- Innovation comfort level
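Two of the metrics above reduce to simple ratios: the voluntary learning participation rate (Level 1, target >60%) and the share of AI recommendations accepted (Level 2). The sketch below shows one way to compute them. The `MetricsSnapshot` fields and function names are hypothetical, and how you count "voluntary learners" or "accepted recommendations" is an assumption you would need to define for your own tooling.

```python
from dataclasses import dataclass

PARTICIPATION_TARGET = 0.60  # the ">60%" target from Level 1 above


@dataclass
class MetricsSnapshot:
    """Counts collected for one reporting period (hypothetical fields)."""
    team_size: int
    voluntary_learners: int        # people who joined optional AI learning
    recommendations_accepted: int  # AI suggestions a human approved
    recommendations_rejected: int  # AI suggestions a human declined


def participation_rate(snapshot):
    """Fraction of the team that took part in voluntary learning."""
    if snapshot.team_size == 0:
        return 0.0
    return snapshot.voluntary_learners / snapshot.team_size


def acceptance_rate(snapshot):
    """Fraction of reviewed AI recommendations accepted, or None if none were reviewed."""
    total = snapshot.recommendations_accepted + snapshot.recommendations_rejected
    if total == 0:
        return None
    return snapshot.recommendations_accepted / total


def meets_participation_target(snapshot):
    """True when participation is strictly above the 60% target."""
    return participation_rate(snapshot) > PARTICIPATION_TARGET


if __name__ == "__main__":
    snap = MetricsSnapshot(team_size=40, voluntary_learners=26,
                           recommendations_accepted=30, recommendations_rejected=10)
    print(f"Participation: {participation_rate(snap):.0%}")
    print(f"Acceptance: {acceptance_rate(snap):.0%}")
    print(f"Target met: {meets_participation_target(snap)}")
```

Tracking the trend of these ratios over successive periods is likely more informative than any single reading, in line with the note above that growth rate matters more than absolute numbers.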

Innovation Rhythms

Sustainable innovation requires predictable rhythms, not random bursts:

Daily Habits (5-15 minutes):

- One AI tool experiment

- One new prompt technique

- One process questioned: “Could AI do this?”

Weekly Practices (2-3 hours):

- Team AI showcase

- Peer learning session

- Innovation hour (protected time)

Monthly Rituals (Half day):

- Innovation challenge

- External speaker/demo

- Progress celebration

- Failure analysis session

Quarterly Initiatives (Multi-day):

- Major AI implementation

- Strategy adjustment

- Capability assessment

- Vendor evaluation

This cadence creates predictable progress while maintaining flexibility for experimentation. Organizations that establish these rhythms see 3x higher sustained adoption rates.

Part 5: Leadership & Governance

Key Insight Box

- Leaders must model vulnerable learning to create permission for others

- Structural enablers matter more than motivational speeches

- Governance must balance innovation with risk—too much of either fails

Leading Through Uncertainty

IT leaders face a unique challenge in AI transformation: leading confidently through fundamental uncertainty. Unlike cloud migration where the end state was clear, nobody truly knows where AI transformation leads. This uncertainty, if not properly managed, paralyzes organizations.

The most effective AI transformation leaders share one surprising trait: They’re comfortable saying “I don’t know.” This vulnerability, rather than undermining authority, builds trust and creates permission for others to learn.

Behaviors That Accelerate Transformation:

Public Learning: The CIO who attends Python basics alongside junior developers. The infrastructure manager who shares their ChatGPT failures in team meetings. The security director who asks “stupid questions” about neural networks.

Actual Leader Quote: “I spent two hours trying to get AI to write a firewall rule. It produced something that would have exposed our entire network. I learned that AI needs very specific constraints. Who else has learned something interesting from AI failures this week?”

Experimentation Modeling: Leaders who visibly use AI tools daily, share their prompts publicly, show incremental improvement, accept imperfect results, and celebrate learning over perfection.

Protection and Permission: The most important leadership behavior? Protecting learning time from operational demands. One CIO’s rule: “Friday afternoons are for AI exploration. No meetings, no incidents unless critical. This is our investment in the future.”

What’s fascinating is how leader behavior predicts team adoption. Teams whose leaders publicly experiment with AI show 2.7x higher adoption rates than teams whose leaders delegate AI to others.

Organizational Enablers

Cultural change requires structural support. The gap between organizations that transform and those that struggle isn’t motivation—it’s infrastructure.

Resource Allocation That Enables:

- Time: 10% minimum for AI exploration (protected, not “when you have time”)

- Tools: ChatGPT Enterprise, GitHub Copilot, cloud credits for experiments

- Training: LinkedIn Learning, Coursera, conference attendance

- Space: Sandbox environments where breaking things is encouraged

- Budget: Explicit “failure budget” for experiments that might not work

Recognition Systems That Reinforce:

- Innovation Awards: Monthly recognition for best AI experiment (including failures)

- Transformation Champions: Visible titles and responsibilities

- Career Advancement: AI adoption as promotion criteria

- Peer Nominations: Teams recognizing each other’s AI progress

- Attempt Celebration: Rewarding tries, not just successes

Support Structures That Sustain:

- Coverage plans during learning (who handles operations while team learns?)

- Temporary augmentation (contractors covering routine work)

- Adjusted metrics (performance measured differently during transformation)

- Extended timelines (projects take longer during learning phase)

- Psychological support (employee assistance for those struggling)

Reality Check: One Fortune 500 technology company invested $2.3M in AI transformation enablers. Return after 18 months: $14.7M in efficiency gains plus immeasurable innovation value. The investment pays for itself, but only with patience.
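To put the figures in the reality check above on a common footing, the arithmetic can be worked through as follows. This is a minimal sketch using only the two numbers quoted ($2.3M invested, $14.7M in efficiency gains); the function name `roi_summary` is illustrative, and the calculation ignores the time value of money and the unquantified innovation value.

```python
def roi_summary(investment, gains):
    """Return net gain, ROI percentage, and return multiple for one investment."""
    net_gain = gains - investment
    return {
        "net_gain": net_gain,                       # gains minus cost
        "roi_pct": net_gain / investment * 100,     # net gain relative to cost
        "return_multiple": gains / investment,      # gross gains per unit invested
    }


# Figures in millions of USD, taken from the reality check above.
summary = roi_summary(investment=2.3, gains=14.7)
print(f"Net gain: ${summary['net_gain']:.1f}M")                 # Net gain: $12.4M
print(f"ROI: {summary['roi_pct']:.0f}%")                        # ROI: 539%
print(f"Return multiple: {summary['return_multiple']:.1f}x")    # Return multiple: 6.4x
```

On these numbers, each dollar invested returned roughly $6.40 in efficiency gains over 18 months, or about $5.40 net of the original investment.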

Risk and Governance

AI governance must thread the needle between enabling innovation and managing risk. Too much control stifles transformation; too little creates unacceptable exposure.

The Adaptive Governance Model:

Layer 1 – Principles (Foundation, rarely changes):

- Humans remain accountable for AI decisions

- AI augments but doesn’t replace human judgment

- Transparency required in AI decision-making

- Continuous learning from AI outcomes

- Ethical use of AI capabilities

Layer 2 – Policies (Structure, updates quarterly):

- AI tool approval process (fast-track for low-risk tools)

- Data usage guidelines (what AI can access)

- Decision authority matrix (who can approve what)

- Audit requirements (what needs documentation)

- Incident response procedures (when AI fails)

Layer 3 – Practices (Implementation, evolves monthly):

- Code review requirements for AI

- Testing protocols before production

- Monitoring and alerting standards

- Documentation templates

- Knowledge sharing expectations

Layer 4 – Continuous Evolution (Improvement, ongoing):

- Monthly policy reviews with community input

- Incident analysis and learning

- Best practice evolution

- External benchmark comparison

- Regular community feedback
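As one illustration of how the Layer 1 principle of human accountability and the Layer 2 decision authority matrix might be expressed in practice, the sketch below routes each AI recommendation to a required human approver and produces an audit record. The risk tiers, role names, and the `review_recommendation` function are all hypothetical; a real matrix would come from your own policy work.

```python
from enum import Enum


class Risk(Enum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


# Hypothetical decision authority matrix: who must approve at each risk tier.
DECISION_AUTHORITY = {
    Risk.LOW: "on-call engineer",
    Risk.MEDIUM: "team lead",
    Risk.HIGH: "change advisory board",
}


def review_recommendation(description, risk, approved_by=None):
    """Build an audit record for an AI recommendation.

    Nothing is marked approved without a named human approver. `approved_by`
    is assumed to be someone holding the required role; this sketch does not
    verify that.
    """
    return {
        "description": description,
        "risk": risk.name,
        "required_approver": DECISION_AUTHORITY[risk],
        "approved_by": approved_by,
        "status": "approved" if approved_by else "pending_human_review",
    }


if __name__ == "__main__":
    pending = review_recommendation("Resize database connection pool", Risk.MEDIUM)
    print(pending["status"], "->", pending["required_approver"])

    signed_off = review_recommendation("Rotate log files earlier", Risk.LOW,
                                       approved_by="A. Engineer")
    print(signed_off["status"], "by", signed_off["approved_by"])
```

Keeping the matrix as plain data makes it easy to revise during the quarterly policy updates described above, and the returned record doubles as the documentation an audit requirement would ask for.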

The Three Categories of Risk:

Technical Risks (Mitigate through testing):

- Model failures, data quality issues, integration problems

- Mitigation: Extensive testing, gradual rollouts, rollback procedures

Organizational Risks (Mitigate through support):

- Skills gaps, resistance, vendor lock-in

- Mitigation: Training investment, change management, multi-vendor strategy

Ethical Risks (Mitigate through governance):

- Bias, privacy violations, accountability gaps

- Mitigation: Regular audits, clear ownership, transparency requirements

Rather than perfect governance before starting, begin with minimal viable governance and evolve based on learning.

Conclusion: Your 90-Day Quick Start

The First 90 Days That Matter Most

Transformation begins with small, concrete steps that build toward sustainable change. Here’s your practical roadmap:

Week 1-4: Foundation Setting

- Week 1: Leadership alignment session (address fears openly)

- Week 2: Team readiness assessment (use the spectrum framework)

- Week 3: All-hands launch meeting (vulnerability from leadership)

- Week 4: First experiments begin (celebrate all attempts)

Week 5-8: Capability Building

- Week 5-6: Launch voluntary learning groups (no mandates)

- Week 7-8: Run first automation pilots (start small, fail fast)

- Document everything (successes and failures equally)

Week 9-12: Momentum Creation

- Week 9-10: Formalize community of practice

- Week 11-12: First innovation challenge

- Week 12: 90-day celebration (progress, not perfection)

The Promise of Transformation

When IT Operations successfully transforms for AI, professionals aren’t replaced—they’re elevated:

- Orchestrators of intelligent systems (not operators of manual processes)

- Teachers of AI (not executors of repetitive tasks)

- Innovators with AI (not maintainers of status quo)

- Strategists for optimization (not tacticians for survival)

Your Next Action (Choose Based on Your Role)

If You’re a Leader: Share this guide with your team tomorrow. Schedule a vulnerability session where you admit what you don’t know about AI. Protect time for learning starting next week.

If You’re a Practitioner: Open ChatGPT right now. Ask it to help with one annoying task you face regularly. Share what you learn with one colleague.

If You’re a Skeptic: Find one AI success story from a peer organization in your industry. Try the smallest possible AI experiment. Give yourself permission to fail.

If You’re Struggling: Ask for help without shame. You’re not alone—10-15% of professionals feel overwhelmed. Find your learning style and celebrate any progress, however small.

The One Success Factor

Across the dozens of AI transformations we analyzed, one factor predicts success above all others: the courage to begin before feeling ready.

Organizations waiting for perfect plans never transform. Those starting messy and learning continuously succeed.

The gap between organizations that thrive with AI and those that struggle won’t be technology or budget. It will be the courage to embrace uncertainty, the humility to learn publicly, and the persistence to continue when progress slows.

Your transformation starts with the next decision you make.

The future of IT Operations is being written now—not by AI alone, but by professionals choosing to evolve alongside it.

The choice—and the opportunity—is yours.

Frequently Asked Questions

Q: How long does AI transformation really take? A: Meaningful transformation takes 18-24 months. You’ll see early wins in 3-6 months, but cultural change and lasting capability require sustained effort across that full period.

Q: What if our team is too small/large/specialized? A: The principles apply regardless of size. Small teams often transform faster due to less organizational inertia. Large teams need more structure but have more resources. Specialized teams can focus transformation on their specific domain.

Q: Should we hire AI experts or train existing staff? A: Both. Your existing staff understand your systems and culture—irreplaceable knowledge. But bringing in 1-2 AI experts can accelerate learning and provide guidance. The ideal ratio is about 1 AI expert per 20-25 existing staff.

Q: What’s the actual ROI of AI transformation? A: Organizations typically see 20-40% efficiency gains within 18 months, but the real value is in capabilities you couldn’t achieve before—predicting failures, self-healing systems, freed human creativity. The ROI is both measurable (hours saved) and immeasurable (innovation enabled).

Q: How do we handle the people who won’t adapt? A: With compassion and alternatives. Not everyone will become an AI practitioner. Create alternative paths (governance, validation, advisory roles) that leverage existing strengths. For the 5-10% who actively resist, have honest conversations about fit.

Access the Complete Transformation Toolkit

📚 AI Learning Pathways: 12-Week Programs for Every IT Role

Get detailed, week-by-week curricula for:

- Systems Administrators → AI Systems Orchestrators

- Database Administrators → ML Data Architects

- Network Engineers → Intelligent Network Designers

- Security Analysts → AI Security Specialists

- DevOps Engineers → MLOps Platform Engineers

- Cloud Architects → AI Cloud Architects

- Help Desk → AI Support Specialists

- IT Managers → AI Transformation Leaders

- Plus 7 additional specialized roles

Each curriculum includes:

- Weekly learning objectives with specific outcomes

- Curated LinkedIn Learning courses with exact timings

- Hands-on exercises using real tools

- Progress checkpoints and success metrics

- Role-specific AI platforms and tools

- Community engagement activities

- Industry certification pathways

- Peer learning group templates

Want an assessment and tailored learning advice? Run the learning assessment.

Remember: Every expert was once a beginner. Every transformation starts with a single step. That includes the people who wrote this guide.

Your journey toward AI-augmented IT Operations begins with whatever small action you take after reading this. Make it count.